Trustworthy Research for Understandable, Safe Technology

The TRUST Lab at Duke University conducts research in applied AI explainability, technology evaluation, and adversarial alignment to ensure AI systems are transparent, safe, and beneficial for society.

Research Areas

Our interdisciplinary team tackles challenges in developing trust in technology.

Applied Explainability

Applying AI explainability methods to real-world problems.

Technology Evaluation

We focus on both the technical evaluation and benchmarking of AI systems and the assessment of the societal impact of emerging technologies.

Adversarial Alignment

Using adversarial techniques to better explain AI systems.

Current Projects

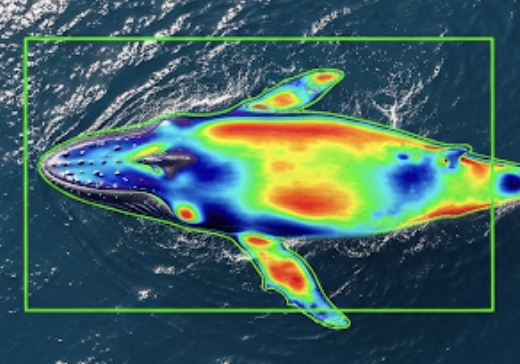

Explainability in Conservation

We are applying state-of-the-art computer vision approaches and explainable machine learning techniques to support wildlife conservation efforts. This interdisciplinary project bridges machine learning with ecological science to create transparent decision-making tools.

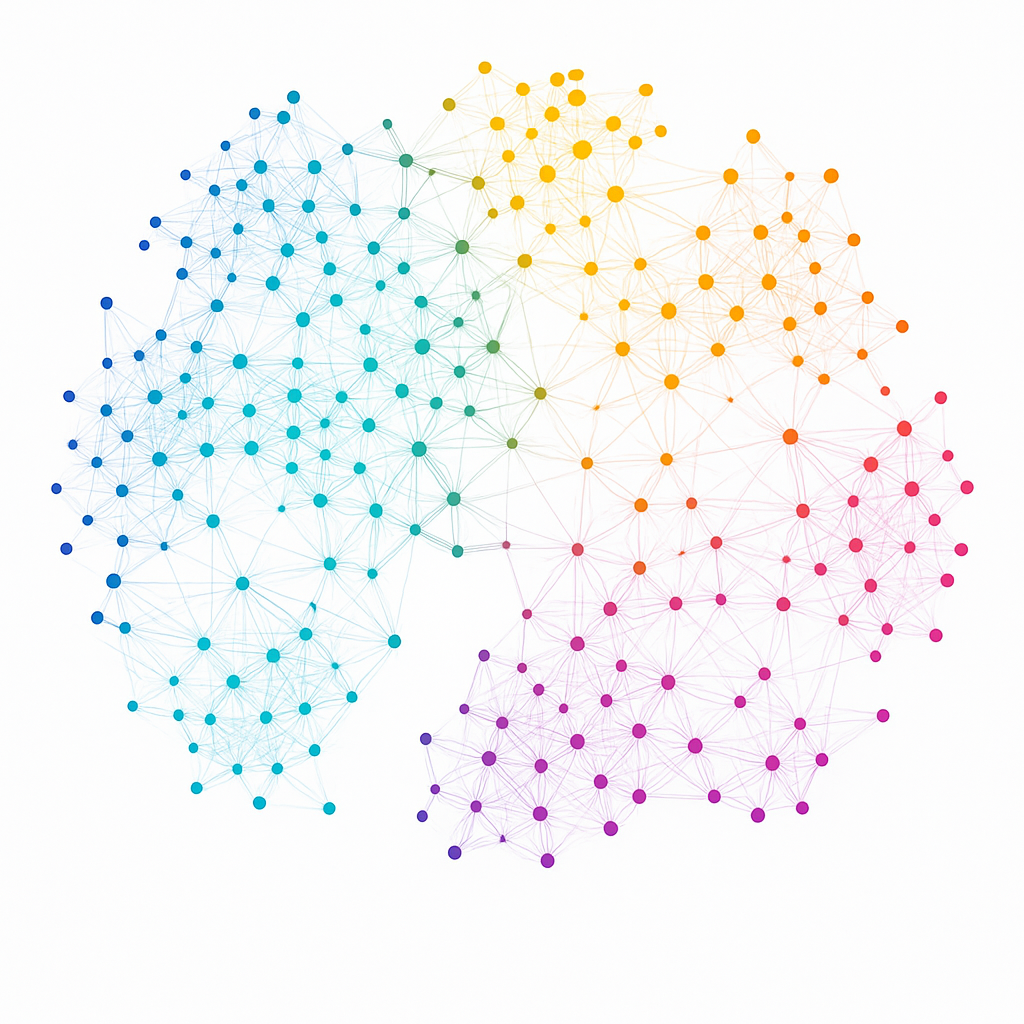

Exploring Geolingual and Temporal Components of AI Embeddings

This project investigates how large embedding models encode geographical and temporal information, with implications for understanding cultural biases and historical shifts in AI systems.

Consilience: AI in Interdisciplinary Research Augmentation

This study explores how voice-based, conversational LLM agents can function as “research translators” in interdisciplinary collaborations.

Explainable and Adversarially Robust Sleep Monitoring

This project addresses gaps in responsible AI for digital health by developing explainable and adversarially robust machine learning models for sleep monitoring.

Adversarial Alignment in Large Language Models

We aim to turn the “bug” of adversarial attacks into a feature for improving AI transparency, trustworthiness, and alignment with human goals. In this project, we are developing an open-source adversarial probing platform for LLMs.

Recent Publications

Jiayi Zhou, Günel Aghakishiyeva, Saagar Arya, Julian Dale, James David Poling, Holly Houliston, Jamie Womble, Gregory Larsen, David Johnston, Brinnae Bent

NeurIPS Workshop on Imageomics (accepted) • 2025

Photorealistic Inpainting for Perturbation-based Explanations in Ecological Monitoring

Günel Aghakishiyeva, Jiayi Zhou, Saagar Arya, Julian Dale, James David Poling, Holly Houliston, Jamie Womble, Gregory Larsen, David Johnston, Brinnae Bent

NeurIPS Workshop on Imageomics (accepted) • 2025

Featured Videos

Watch our latest presentations and research overviews

Responsible AI Symposium

Our recent symposium, hosted at Duke University, introduces society-centered AI

Adversarial Alignment

Dr. Bent introduces the AI 2030 audience to Adversarial Alignment

Get in Touch

Interested in our research? We welcome collaborations, inquiries from prospective students, and partnerships with industry and academia.